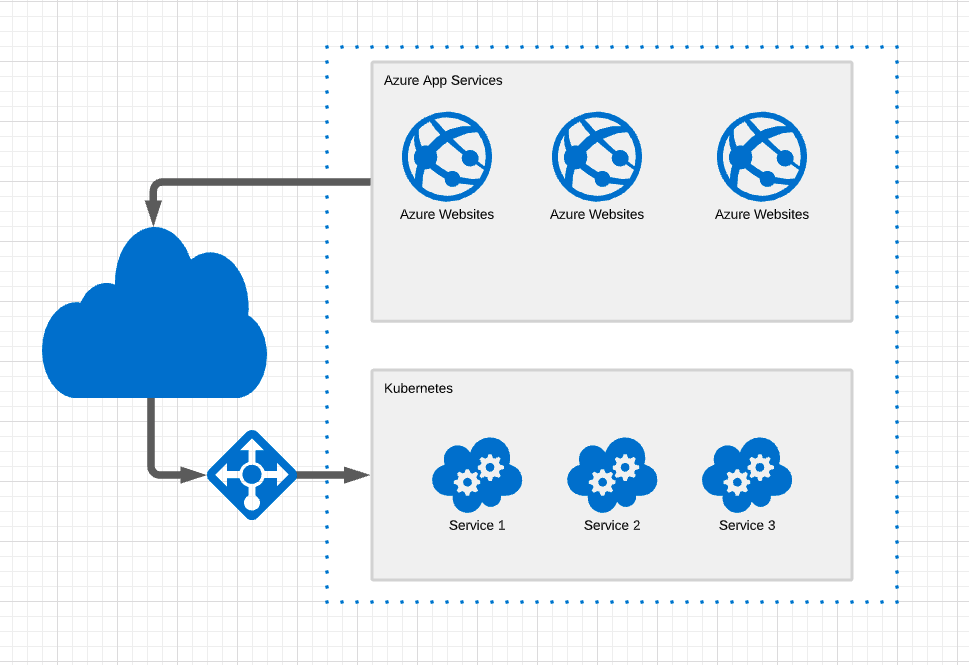

At a customer I work for, we have a number of web sites running on Azure App Services. We also have a number of backend services running in a Kubernetes cluster.

The backend services are all exposed out on the internet, and thus available from the web sites.

There are of course security measures in place, but exposing services that aren't meant to be used by anyone outside the organization is a risk that we don't want, if we can avoid it.

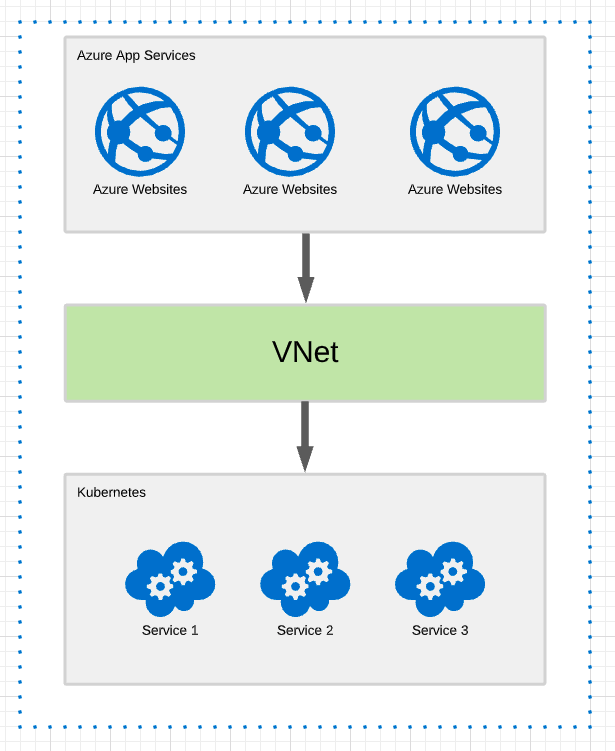

And it turns out that we can in fact avoid it, or very soon at least. Azure App Services has a feature called Regional VNet Integration that allows an App Service to join a VNet. This works as long as your VNet is in the same region as the App Service.

This enables us to have a direct connection from the App Service to the services in our Kubernetes cluster. If we don't want them to be, our Kubernetes services no longer have to be accessible from the public internet at all.

This is great, as it greatly reduces the number of attack vectors against our internal services.

So instead of the model shown above we now have:

At this level it looks pretty simple: join the VNet and everything is great, right? As it turns out, there are a few hurdles that we need to pass before it all works as expected.

As we will see, a Kubernetes service is not immediately accessible from a VNet, even if the pods in the same cluster are.

Once we get access to the Kubernetes services, we also need a DNS service that gives us symbolic names for the services rather than raw IP addresses.

But first a bit of kubernetes networking.

Kubernetes networking

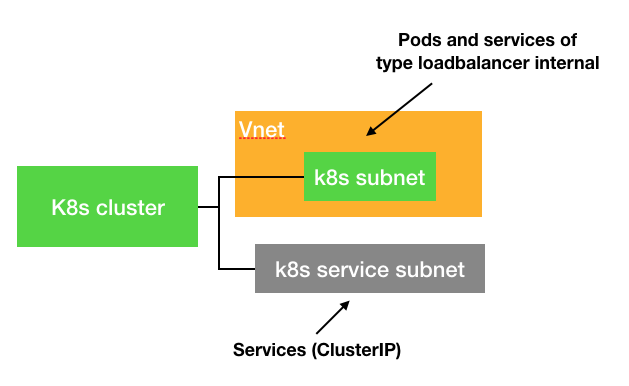

We can create our AKS cluster from the Azure CLI using the az aks create command.

If we are using Azure networking for our AKS cluster, we will typically specify a subnet in a VNet using the --vnet-subnet-id switch. This means that the pods in our cluster will be in this subnet.

We use the --service-cidr switch to define which addresses our services should get.

This gives us a cluster with pods that live inside a subnet within an Azure VNet, and services in a network outside the VNet.
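As a rough sketch of how these switches fit together, the helper below wraps az aks create. The function name, resource group, cluster name and address ranges are placeholders made up for illustration, not our actual setup. The --network-plugin azure switch selects Azure networking, and --dns-service-ip has to be an address inside the service CIDR.

```shell
# Sketch: create an AKS cluster whose pods live in an existing VNet subnet.
# All names and address ranges below are hypothetical placeholders.
create_cluster() {
  subnet_id="$1"   # full resource ID of the subnet the pods should join

  # The service CIDR must not overlap the VNet; services get addresses from it.
  az aks create \
    --resource-group my-resource-group \
    --name my-aks-cluster \
    --network-plugin azure \
    --vnet-subnet-id "$subnet_id" \
    --service-cidr 10.0.0.0/16 \
    --dns-service-ip 10.0.0.10
}
```

Call it as create_cluster "$SUBNET_ID", where SUBNET_ID holds the resource ID of the subnet you want the pods in.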

Enable Regional VNet integration

Now we have a cluster with pods in a VNet (the yellow box in the diagram above). Subnets within an Azure VNet can talk to each other by default, so if we join our Azure App Services to the same VNet we should be connected.

Start by adding a subnet to the same VNet that your Kubernetes cluster is connected to. The subnet should be at least a /27 network.
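For reference, a /27 network holds 32 addresses, and Azure reserves 5 addresses in every subnet. If you prefer the command line over the portal for this step, a minimal sketch could look like the following. The function name, resource group, VNet name, subnet name and address prefix are all hypothetical.

```shell
# Sketch: add a /27 subnet for the App Service integration to an existing VNet.
# Resource group, VNet name, subnet name and prefix are hypothetical placeholders.
create_integration_subnet() {
  az network vnet subnet create \
    --resource-group my-resource-group \
    --vnet-name my-vnet \
    --name appservice-subnet \
    --address-prefixes 10.240.1.0/27
}
```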

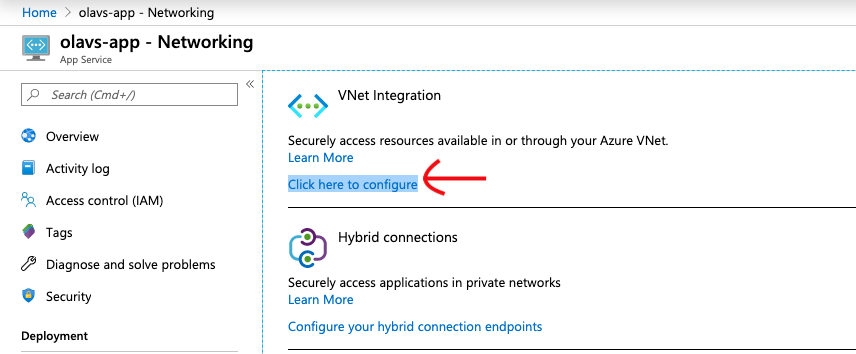

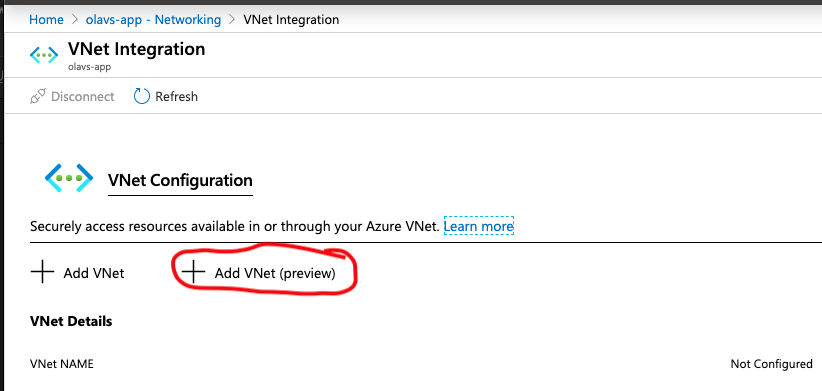

Enabling the regional VNet integration is quite easy to do in the Azure portal. Just navigate to the App Service, open the "Networking" tab and click the "Click here to configure" link.

Then click "Add VNet (preview)". Make sure to use the right one, the preview. This is the VNet integration that doesn't need a gateway.

Select the VNet and the subnet that you have created in that VNet already and you should be all set.

Of course we only use the portal for experiments. For real deployments we script the same steps; in our case we use Bluefin and Fake.build for this.

You will soon be able to use the Azure CLI, but at the time of writing this post there is a bug in the Azure CLI implementation that stops us from using it for this particular case.

So now we have a Kubernetes cluster and an App Service on the same VNet, which means that we can talk directly to the pods in the "k8s subnet".

But so far we have only enabled the App Service to talk directly to the pods using their IP addresses. At this point the DNS for the App Service knows nothing about the pods. And it is not the pods we want to talk to anyway: we always want to go through the service.

So let's fix that.

Kubernetes networking - part 2

In the previous section on networking we had a diagram showing the cluster and two subnets. On the top one (the green one inside the yellow VNet) there was a note about it containing pods and services of type "LoadBalancer internal". Now it is time to explain what those services are.

If we deploy a default service to the cluster, it will be available on an IP address inside the "k8s service subnet" as shown in the diagram. This subnet is not part of the VNet and can't be reached from our App Service.

AKS also supports services of type LoadBalancer. We get one if we add type: LoadBalancer to our service definition (YAML file).

A service of type LoadBalancer will get an external IP address and can be reached from the internet, which clearly is not what we want.

If we also add a special Azure load balancer annotation instructing AKS to create an internal LoadBalancer service, then AKS will do what we need: it will create a service with an IP address in the green subnet (inside the VNet).

The whole YAML file should look something like this:

    apiVersion: v1
    kind: Service
    metadata:
      name: my-service
      annotations:
        service.beta.kubernetes.io/azure-load-balancer-internal: "true"
    spec:
      type: LoadBalancer
      ports:
        - port: 80
      selector:
        app: my-app

Now we have a service with an IP address that we can reach from the Azure App Service. But still no DNS.

Adding services in the VNet to kubernetes DNS

Newer AKS clusters use CoreDNS for DNS services by default. It is exposed through a service that is still named kube-dns, which we can inspect like this:

    kubectl get services kube-dns -o wide -n kube-system

This should display something similar to this:

    NAME       TYPE        CLUSTER-IP   EXTERNAL-IP   PORT(S)         AGE   SELECTOR
    kube-dns   ClusterIP   10.x.y.z     <none>        53/UDP,53/TCP   72d   k8s-app=kube-dns

The cluster-ip will be in the services subnet (the grey subnet) and not reachable from the Azure App Service.

But we can use the same trick as we did with our own service. We create a new service with type: LoadBalancer and the internal load balancer annotation, and give it the same selector as the default kube-dns service: k8s-app=kube-dns. That gives us a DNS service with an IP address in the VNet (the green subnet).
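A minimal sketch of such a DNS service, written to a file so it can be reviewed before it is applied, might look like this. The service name kube-dns-internal and the file name are made up for illustration. The sketch only exposes UDP port 53, on the assumption that the Kubernetes version in use may reject LoadBalancer services that mix TCP and UDP; newer versions allow adding a TCP port as well.

```shell
# Sketch: write a manifest for an internal load balancer in front of the CoreDNS pods.
# "kube-dns-internal" is a hypothetical name; the selector is the one used by kube-dns.
cat > kube-dns-internal.yaml <<'EOF'
apiVersion: v1
kind: Service
metadata:
  name: kube-dns-internal
  namespace: kube-system
  annotations:
    service.beta.kubernetes.io/azure-load-balancer-internal: "true"
spec:
  type: LoadBalancer
  selector:
    k8s-app: kube-dns
  ports:
    # UDP only in this sketch (assumption: mixed-protocol LoadBalancer may be unsupported)
    - name: dns
      port: 53
      targetPort: 53
      protocol: UDP
EOF
```

Apply it with kubectl apply -f kube-dns-internal.yaml. Once the load balancer has been provisioned, kubectl get services kube-dns-internal -n kube-system should show an EXTERNAL-IP from the VNet.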

Now, if we try to resolve one of our services from inside the cluster using, for example, nslookup:

    nslookup my-service.default.svc.cluster.local

we will see that the name resolves to the CLUSTER-IP, which is an address in the grey subnet. That is not what we want.

We want the EXTERNAL-IP which is an address in the green subnet.

CoreDNS plugins

We need to configure CoreDNS to also include the external IP addresses for services.

Fortunately CoreDNS has a built-in plugin for this, named k8s_external, and AKS supports all built-in plugins for CoreDNS.

We configure CoreDNS by adding a Kubernetes ConfigMap that needs to be named coredns-custom. This ConfigMap should have a key whose name ends in .override, for example test.override; AKS imports such keys into the default CoreDNS server block.

To have the k8s_external plugin serve the external IP addresses in a domain named mydomain.local, the complete ConfigMap should look like this:

    apiVersion: v1
    kind: ConfigMap
    metadata:
      name: coredns-custom
      namespace: kube-system
    data:
      test.override: |
        k8s_external mydomain.local

Now we have kubernetes services in our cluster with IP addresses in the VNet and they can be resolved using a DNS that is also available on an IP address in the same VNet.

The external ip address (the address in the green subnet) can be resolved inside the cluster using the mydomain.local domain like this:

    nslookup my-service.default.mydomain.local

But still, our App Service does not use the DNS server located in the "k8s subnet" in the VNet.

Setting a custom DNS Server for an Azure App Service

Luckily the final part of the puzzle, getting the App Service to use the CoreDNS DNS server in the cluster, is very easy.

We just need to go to the configuration settings of the Azure App Service and add a setting named WEBSITE_DNS_SERVER that points to the "EXTERNAL-IP" of the DNS service we configured in the section above ("Adding services in the VNet to kubernetes DNS").

If we want a backup DNS server, we can also set WEBSITE_ALT_DNS_SERVER.
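If you would rather script this than click through the portal, a sketch along these lines could work. The function name is made up, and the resource group, App Service name and DNS service name are passed in as arguments. It reads the VNet address of the DNS service with kubectl and writes it to the App Service with az webapp config appsettings set.

```shell
# Sketch: point an App Service at the cluster DNS that is exposed on the VNet.
# Arguments: resource group, App Service name, name of the internal DNS service.
use_cluster_dns() {
  resource_group="$1"
  app_name="$2"
  dns_service="$3"

  # Read the VNet address that the internal load balancer assigned to the DNS service.
  dns_ip=$(kubectl get service "$dns_service" --namespace kube-system \
    --output jsonpath='{.status.loadBalancer.ingress[0].ip}')

  # Store the address as an application setting on the App Service.
  az webapp config appsettings set \
    --resource-group "$resource_group" \
    --name "$app_name" \
    --settings "WEBSITE_DNS_SERVER=$dns_ip"
}
```

A call would then look like use_cluster_dns my-resource-group my-web-app my-dns-service, with all three names replaced by your own.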

That's it. We now have direct access from our Azure App Service to our AKS Kubernetes cluster.