This is a semi-scientific research project based on a cool technical solution. Clearly the best way to do research.

It all started when my colleague Tom Einar showed me a simple demo of the Emotion API, part of Microsoft Cognitive Services (previously Project Oxford). I instantly found the API both fun and very easy to use. Endless possibilities for creating a funny, viral app!

Since time is limited, as always, I decided to combine two funny things someone else made into something really cool in as few lines of code as possible.

The idea

Let the Emotion API evaluate an image of a face without a beard, use the Wurstify API to draw a perfect beard on the image, and then evaluate the result through the Emotion API again. My hypothesis was simple:

"People are happier with beards".

Getting started with the Emotion API

It is easy to get started, but you need to do a few things:

The subscription key

First you need to sign up for Cognitive Services and get a subscription key. You can have a lot of fun with the FREE plan, so that is fine for this project.

Testing the API

You can now go to the Cognitive Services APIs documentation and test the Emotion API in the API reference.

Simply enter your subscription key and set the URL of the image you want to evaluate.

Press the "Send" button, and you will get a JSON result containing both the coordinates of the rectangle where a face was found and the score for each emotion:

```json
[
  {
    "faceRectangle": {
      "height": 182,
      "left": 203,
      "top": 125,
      "width": 182
    },
    "scores": {
      "anger": 3.440062E-07,
      "contempt": 0.004647813,
      "disgust": 8.61395364E-08,
      "fear": 2.54636451E-10,
      "happiness": 0.679269433,
      "neutral": 0.316078871,
      "sadness": 3.38570362E-06,
      "surprise": 9.86783064E-08
    }
  }
]
```

Notice that most of the scores are very small numbers written in scientific notation (for example, 3.440062E-07 is 0.000000344), so in the example above "happiness" and "neutral" are clearly the most relevant emotions in the image.
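To make "most relevant" concrete, here is a minimal Python sketch that parses the sample response and keeps only the emotions scoring at least 0.01, sorted from highest to lowest. The 0.01 cutoff and the `dominant_emotions` helper are illustrative choices, not part of the Emotion API.

```python
import json

# The sample response from the API console, for a single face.
SAMPLE_RESPONSE = """
[{"faceRectangle": {"height": 182, "left": 203, "top": 125, "width": 182},
  "scores": {"anger": 3.440062E-07, "contempt": 0.004647813,
             "disgust": 8.61395364E-08, "fear": 2.54636451E-10,
             "happiness": 0.679269433, "neutral": 0.316078871,
             "sadness": 3.38570362E-06, "surprise": 9.86783064E-08}}]
"""


def dominant_emotions(scores: dict, min_score: float = 0.01) -> list:
    """Return (emotion, score) pairs with score >= min_score, highest first.

    The 0.01 default is an arbitrary cutoff chosen for illustration.
    """
    relevant = [(name, score) for name, score in scores.items() if score >= min_score]
    return sorted(relevant, key=lambda pair: pair[1], reverse=True)


faces = json.loads(SAMPLE_RESPONSE)  # JSON numbers like 3.44E-07 parse as floats
top = dominant_emotions(faces[0]["scores"])
for name, score in top:
    print(f"{name}: {score:.3f}")
```

With this cutoff, only happiness (0.679) and neutral (0.316) survive; contempt (0.0046) and everything written with an exponent are dropped.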

Installing the Nuget package

You don't have to study the API reference in detail; there is a NuGet package with a ready-to-use client SDK. The package uses the project's old name: Microsoft.ProjectOxford.Emotion.

There are also some examples of using the SDK on GitHub, but these are for C#/XAML, Android, and Python. I wanted to build a Web API to use in my application, so I just installed the NuGet package.

With the package installed, I could create a simple method to get the emotions from a given image URL. To keep it simple, I assumed there is only one face in the image and selected the first result.
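The method described here used the C# client from the NuGet package. As a language-neutral stand-in, here is a rough Python sketch that calls the REST endpoint directly and, like the original, takes only the first face. Assumptions: the `EMOTION_ENDPOINT` URL is the Project Oxford-era address and is illustrative only (the service has since been retired), and `get_emotions` and `post_json` are hypothetical helper names. The `Ocp-Apim-Subscription-Key` header is the usual way Cognitive Services APIs receive the subscription key.

```python
import json
import urllib.request
from typing import Callable, Optional

# Assumption: Project Oxford-era endpoint, shown for illustration only.
EMOTION_ENDPOINT = "https://api.projectoxford.ai/emotion/v1.0/recognize"


def post_json(url: str, headers: dict, body: dict) -> list:
    """POST a JSON body and return the decoded JSON response."""
    request = urllib.request.Request(
        url,
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json", **headers},
        method="POST",
    )
    with urllib.request.urlopen(request) as response:
        return json.loads(response.read().decode("utf-8"))


def get_emotions(image_url: str, subscription_key: str,
                 send: Callable[[str, dict, dict], list] = post_json) -> Optional[dict]:
    """Return the emotion scores of the first face found, or None if there is no face."""
    faces = send(
        EMOTION_ENDPOINT,
        {"Ocp-Apim-Subscription-Key": subscription_key},
        {"url": image_url},
    )
    # Keep it simple: assume one face and take the first result.
    return faces[0]["scores"] if faces else None
```

The `send` parameter exists so the HTTP call can be swapped out, for example with a fake that returns a canned response when testing without a key.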

That's the basics. We are now ready to do some research!

Time to experiment

With everything ready, it was time to put the idea and my hypothesis to the test!

Are people really happier with beards?

First I needed a model for the result, including the original image with emotions, the bearded image with emotions, and a simple calculation of the change for each emotion. I also included a property to forward error messages from the Emotion API.
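A model along those lines could look like the following Python dataclasses. This is a sketch, not the original C# class: the field names mirror the keys in the JSON output shown later in this post, and `to_dict` is a hypothetical helper that reproduces that PascalCase shape.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional


@dataclass
class EmotionChange:
    emotion: str
    change: float  # bearded score minus original score


@dataclass
class AnalysisResult:
    original_image: str
    bearded_image: str
    original_scores: Dict[str, float] = field(default_factory=dict)
    bearded_scores: Dict[str, float] = field(default_factory=dict)
    emotion_changes: List[EmotionChange] = field(default_factory=list)
    error_message: Optional[str] = None  # forwarded from the Emotion API

    def to_dict(self) -> dict:
        """Serialize using the PascalCase keys seen in the API output."""
        return {
            "ErrorMessage": self.error_message,
            "OriginalImage": self.original_image,
            "OriginalScores": self.original_scores,
            "BeardedImage": self.bearded_image,
            "BeardedScores": self.bearded_scores,
            "EmotionChanges": [
                {"Emotion": c.emotion, "Change": c.change} for c in self.emotion_changes
            ],
        }
```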

Then I created a simple Web API controller to perform the analysis. The AnalyzeImage action performs the following steps:

- Set the original image, and get the URL of the image with a beard

- Get emotions for the original image

- Get emotions for the bearded image

- Calculate the change in each emotion
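The four steps above can be sketched in Python roughly as follows. Several details are assumptions: the proxy format in `WURSTIFY_PROXY` is copied from the bearded-image URL in the test result below (with the original URL appended unencoded, as it appears there), the 0.01 threshold and largest-to-smallest ordering are inferred from that result rather than documented, and `get_emotions` is any function returning a face's scores (or `None`), for example a thin wrapper around the Emotion API. All names here are hypothetical.

```python
from typing import Callable, Dict, List, Optional

Scores = Dict[str, float]

# Format taken from the bearded-image URL in this post's test result.
WURSTIFY_PROXY = "http://wurstify.me/proxy?since=0&url="


def bearded_url(image_url: str) -> str:
    """Step 1: build the URL of the bearded version of an image."""
    return WURSTIFY_PROXY + image_url


def emotion_changes(original: Scores, bearded: Scores, threshold: float = 0.01) -> List[dict]:
    """Step 4: bearded minus original, small changes dropped, largest increase first."""
    changes = [
        {"Emotion": name, "Change": bearded[name] - original[name]}
        for name in original
        if name in bearded and abs(bearded[name] - original[name]) >= threshold
    ]
    return sorted(changes, key=lambda c: c["Change"], reverse=True)


def analyze_image(image_url: str, get_emotions: Callable[[str], Optional[Scores]]) -> dict:
    result = {"ErrorMessage": None, "OriginalImage": image_url,
              "BeardedImage": bearded_url(image_url)}
    try:
        # Steps 2 and 3: score both images.
        result["OriginalScores"] = get_emotions(result["OriginalImage"])
        result["BeardedScores"] = get_emotions(result["BeardedImage"])
    except Exception as error:  # forward API errors to the caller
        result["ErrorMessage"] = str(error)
        return result
    if result["OriginalScores"] is None or result["BeardedScores"] is None:
        result["ErrorMessage"] = "No face found"
        return result
    result["EmotionChanges"] = emotion_changes(result["OriginalScores"], result["BeardedScores"])
    return result
```

A more robust version would percent-encode the original URL (for example with `urllib.parse.quote`) before appending it to the proxy URL.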

Now I could run tests against the API, and I figured the perfect test subject would be our friend Joey.

How you doin'?

So I posted the image to the API and got the following result:

```json
{
  "ErrorMessage": null,
  "OriginalImage": "http://blog.novanet.no/content/images/2016/04/Matt_LeBlanc-2.jpg",
  "OriginalScores": {
    "Anger": 8.380138e-8,
    "Contempt": 0.0005900293,
    "Disgust": 2.08110915e-8,
    "Fear": 1.18491536e-10,
    "Happiness": 0.806948,
    "Neutral": 0.19246006,
    "Sadness": 0.00000173152262,
    "Surprise": 3.749145e-8
  },
  "BeardedImage": "http://wurstify.me/proxy?since=0&url=http://blog.novanet.no/content/images/2016/04/Matt_LeBlanc-2.jpg",
  "BeardedScores": {
    "Anger": 0.00289123366,
    "Contempt": 0.1066678,
    "Disgust": 0.000363111729,
    "Fear": 0.00000708313064,
    "Happiness": 0.0526458472,
    "Neutral": 0.8270101,
    "Sadness": 0.0103803733,
    "Surprise": 0.0000344286454
  },
  "EmotionChanges": [
    {
      "Emotion": "Neutral",
      "Change": 0.634550035
    },
    {
      "Emotion": "Contempt",
      "Change": 0.106077775
    },
    {
      "Emotion": "Sadness",
      "Change": 0.010378642
    },
    {
      "Emotion": "Happiness",
      "Change": -0.754302144
    }
  ]
}
```

The major changes are in "Neutral" (+0.63) and "Happiness" (-0.75).
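Each entry in "EmotionChanges" is simply the bearded score minus the original score for that emotion. Checking the four listed entries by hand against the scores in the response:

```latex
\Delta_e = s_e^{\text{bearded}} - s_e^{\text{original}}

\begin{aligned}
\Delta_{\text{Neutral}}   &= 0.8270101 - 0.19246006 = 0.63455004 \\
\Delta_{\text{Contempt}}  &= 0.1066678 - 0.0005900293 \approx 0.10607777 \\
\Delta_{\text{Sadness}}   &= 0.0103803733 - 0.00000173152262 \approx 0.01037864 \\
\Delta_{\text{Happiness}} &= 0.0526458472 - 0.806948 \approx -0.75430215
\end{aligned}
```

These agree with the reported values to about seven significant digits, and the tiny differences are consistent with single-precision floats. The list also seems to skip small changes: Anger rises by about 0.0029 but is not listed, while Sadness (about 0.0104) is. A cutoff of roughly 0.01 is therefore a plausible reading of the output, not something stated by the API.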

OK, so Joey seems more neutral and less happy with the beard. Could I really be that wrong? The bearded image shows that the beard really was added to his face. This is a shocking result!

Me: How you doin' with a beard, Joey?

Joey: Meh...

To simplify further testing, I built a simple Angular app on top of the API and ran several more tests. Most of them showed similar results.

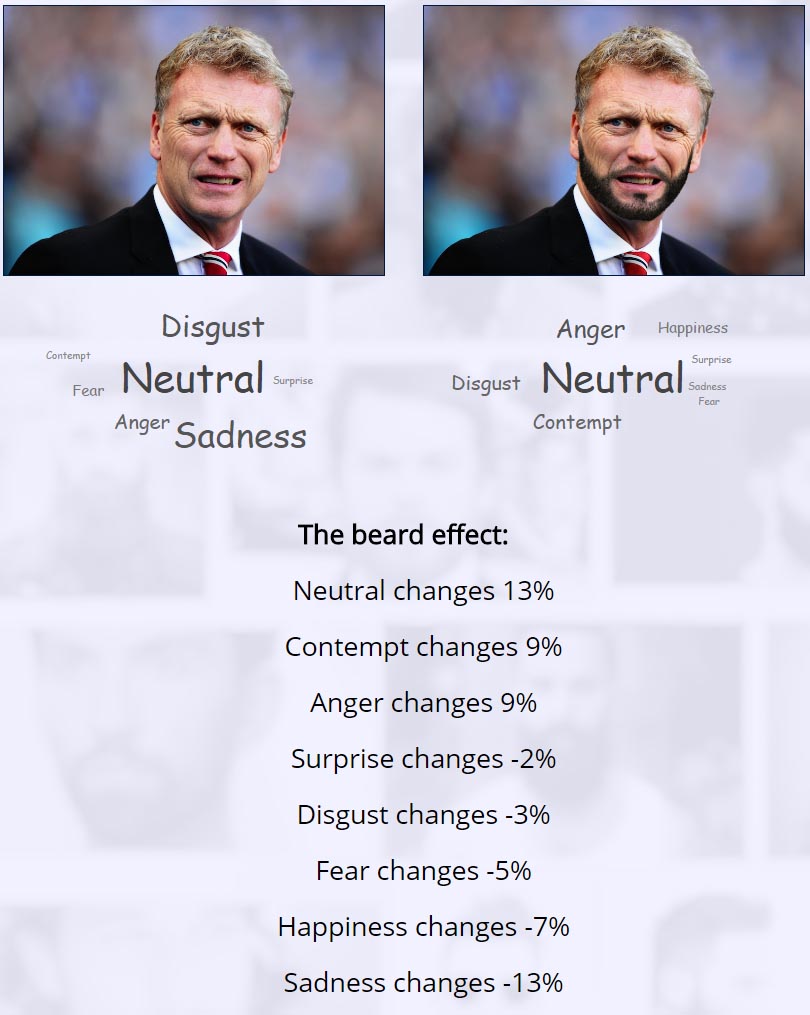

Test result for "Sad manager"

There are a lot of emotions going on here, but overall he hides them better with the beard.

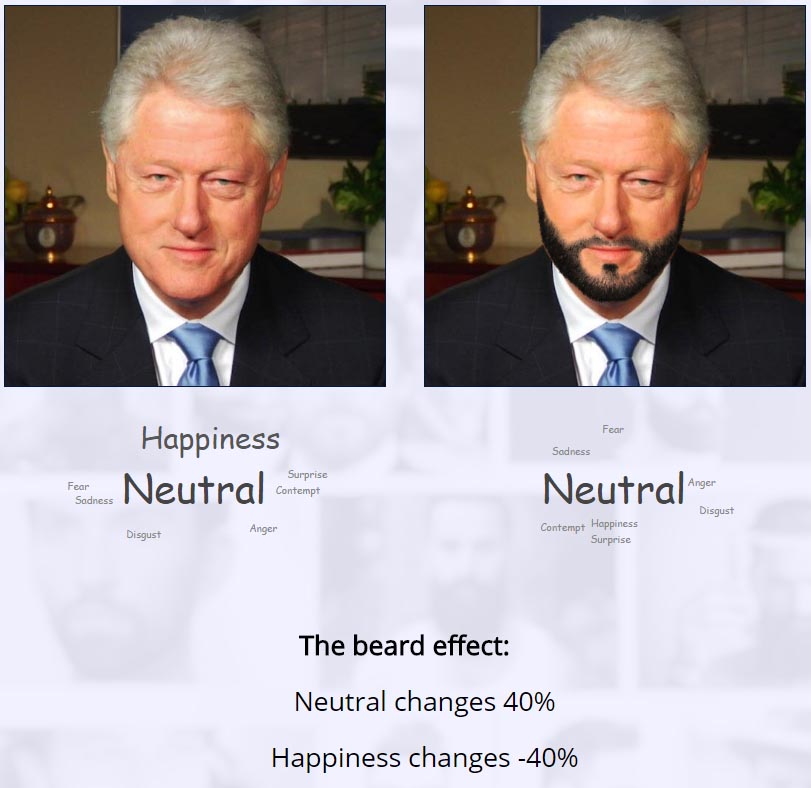

Test result for "Happy president"

You can kind of see it, really. He is clearly happier without the beard.

Conclusion

I tested more images than I can count on my fingers, and the conclusion is clear: beards hide your emotions. It works both ways: you seem less happy, but also less angry or sad.

I deployed the app, so you can test your own images.

PS: It is running on the free plan, so if it gets popular it is probably going to be busy.